Hyper-personalisation made possible (with Optimizely)

Hyper-personalisation can sound daunting: the domain of multinationals and tech unicorns, with mega dedicated development teams producing giga bespoke applications in search of ever more strained superlatives. But we didn’t write our hyper-personalisation manifesto to be aspirational. It's designed to be a practical set of declarations that are achievable for content and marketing organisations still developing their digital maturity.

Hyper-personalisation is within the capabilities of a few good experience management stacks, making it viable for most organisations. We’ve pulled together three articles to help explain:

- The landscape of personalisation and hyper-personalisation

- The destination: a manifesto for what we believe hyper-personalisation should deliver

- And in this piece, the directions for how you might deliver a hyper-personalisation solution

There are five pillars you need to deliver a hyper-personalisation programme that increases user satisfaction and delivers on business objectives.

- Technical capability

- Signals understanding

- Deliveries design

- Implementation

- Iteration and refinement

If you’re unfamiliar with some of these terms, you’ll find explanations in our previous articles in this series.

It’s time to begin. Know the user. Show the way. Understand the goals.

1. Technical capability

We’ll start with technical capability because it’s the element that most people tend to see as the insurmountable barrier. We’re going to illustrate the required technical capabilities using Optimizely One in its identity as an integrated Digital Experience Platform (DXP), because it provides excellent examples of all of the key platform components we need.

This is simply one approach; composable architectures made up of platforms from multiple vendors are another completely valid way of approaching this (which could include any of these Optimizely products in isolation, or indeed none of them). For an idea of some alternative platforms you might use, see our personalisation landscape guide.

This is a strategic solutions guide rather than a developer guide, so we won’t be getting into the detail of which NuGet packages to install or which API calls to make, which may come either as a disappointment or as a relief (if it’s the former, get in touch and let’s talk!).

The capabilities we need

We do need quite a few technical capabilities, but most platforms within our stack will deliver more than one capability. These are the core capabilities you’ll need:

- Audience building (to segment users for targeted messaging variants)

- Behaviour analysis (to gather information about users’ interactions with your engagement endpoints, so you can gain intelligence on their intentions and motivations)

- Identity management (to identify specific users so we can apply data from our customer data management solution, rather than just session data)

  - This is optional. We can still provide extensive personalisation from session-based behavioural signals and anonymous profiles for repeat visitors, but in this series we want to stretch our legs a little further

- Customer data management (to process and store information about specific users)

- Tracking consent management (in most jurisdictions you’ll need provable consent from your users to gather their behavioural data, including the anonymous ones)

- Content and digital asset management (to create, edit and store our content assets)

- Content experience delivery orchestration and endpoints (whether those are websites, mobile and desktop applications, emails and notifications, voice channels, conversational endpoints, or mixed multi-channel)

- A content delivery API to deliver content to those endpoints

  - Diving slightly further into the technical background for a moment: ideally this should be a GraphQL-based interface because a traditional RESTful interface will limit our ability to retrieve content as dynamically as we want. We don’t have to be delivering a fully headless website to create this experience – we can consume this API server-side, or from dynamic client-side components within an otherwise traditionally architected website (or other application).

- Behaviour and engagement analytics and reporting (so you can improve your personalisation models, and target your content investment)

- Natural language processing (both to analyse your content, and to analyse people’s requests for that content)

- Personalisation deliveries (connecting users to both content and journey personalisations)
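To make the GraphQL point in the list above a little more concrete, here is a minimal sketch of how a component might build a content request that names exactly the fields it needs. The query, the `ArticlePage` type, and the `topics`, `title`, `url` and `summary` fields are hypothetical placeholders of our own, not the schema of Optimizely Graph or any other product; your own content model will dictate the real names.

```python
import json

# Hypothetical query: the type and field names below are illustrative only
# and will differ in a real content schema.
ARTICLE_QUERY = """
query ArticlesByTopic($topics: [String!], $limit: Int) {
  ArticlePage(where: { topics: { in: $topics } }, limit: $limit) {
    items { title url summary }
  }
}
"""


def build_content_request(topics, limit=5):
    """Return a GraphQL-over-HTTP request body asking only for the fields we need."""
    if not topics:
        raise ValueError("at least one topic is required")
    return {
        "query": ARTICLE_QUERY.strip(),
        # De-duplicate and sort so identical requests are cache-friendly.
        "variables": {"topics": sorted(set(topics)), "limit": limit},
    }


if __name__ == "__main__":
    print(json.dumps(build_content_request(["tariffs", "smart meters"]), indent=2))
```

The same request body could be posted server-side or from a client-side component, which is why a headless build isn't a prerequisite.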

The Optimizely components which deliver

Handily for the sake of this article, we can get very nearly all of these capabilities from a few of the products in the Optimizely One stack. You can safely skip this section if you're not that interested in the Optimizely product set:

- Optimizely CMS

  - Content and digital asset management

  - Identity management (As we noted above, this is an optional component to enable us to create more specific personalisation scenarios for this article, but almost all of the techniques we’re describing are relevant in anonymous personalisation too.)

  - Audience building (based on deduced or behavioural information via Visitor Groups)

  - Content experience delivery orchestration and endpoints (for pull-based experiences. We'll use Campaign for push-based experiences.)

  - Content delivery API (via Optimizely Graph)

  - Natural language processing of users’ requests (via Semantic Search)

- Optimizely Data Platform (and Optimizely Connect Platform)

  - Customer Data Management and data ingestion

  - Audience building (across personal, disclosed and deduced information)

  - Behaviour and engagement analytics and reporting (visitor-based)

    - Realistically, this capability needs to be supported by a dedicated analytics package (e.g. GA4) to cover all the behavioural information you’ll need – but you already have that, right?

- Content Recommendations

  - Natural language processing of your content and users’ interests

  - Behaviour and engagement analytics and reporting (topic-based)

- Optimizely Campaign

  - Content experience delivery orchestration and endpoints (for push-based experiences. We'll use CMS for pull-based.)

We will also need a separate tracking consent management platform – something like Cookiebot, OneTrust or Cookie Control – but it’s not specific to our hyper-personalisation scenarios.

Normally, when we evaluate the capabilities of your existing stack, we will identify not only missing capabilities but also capabilities that overlap between your existing platforms. Filling those gaps, and orchestrating responsibilities across the platforms so that experiences are consistent across all channels and touchpoints, is the primary goal of our technology and platform review.

Optimizely Web Personalisation

Some of you may be wondering when we’re going to mention Optimizely Web Personalisation, from the Optimizely Experimentation product group.

Web Personalisation is great for building out rule-based audiences and delivering personalised messaging to them, and in this model, if we were delivering headlessly, we would potentially swap it in for Visitor Groups. But if we’re running Optimizely PaaS Core with server-side rendering, using Visitor Groups means we don’t have to worry about the tag size and performance implications that can arise when scaling personalisation up within Web Personalisation.

Similar considerations look like they’ll apply when Optimizely SaaS Core becomes available, with respect to using Web Personalisation or SaaS Core’s embedded personalisation capabilities.

Generative AI

We haven’t gone into generative AI capabilities in detail in this article because, as you can probably tell by looking at the scrollbar, it was getting a bit long.

While at this point Optimizely incorporates text and image-based generative AI into its content creation tools (specifically into Optimizely CMP), this is currently limited to a few content structures. However, the newly announced partnership with Writer.com looks like it should give us the additional flexibility we’re hoping for in the automatic creation of content variations within CMP.

We do have an approach to dynamically generate content on the fly for individual users, which we'll take a look at another time.

2. Signals understanding

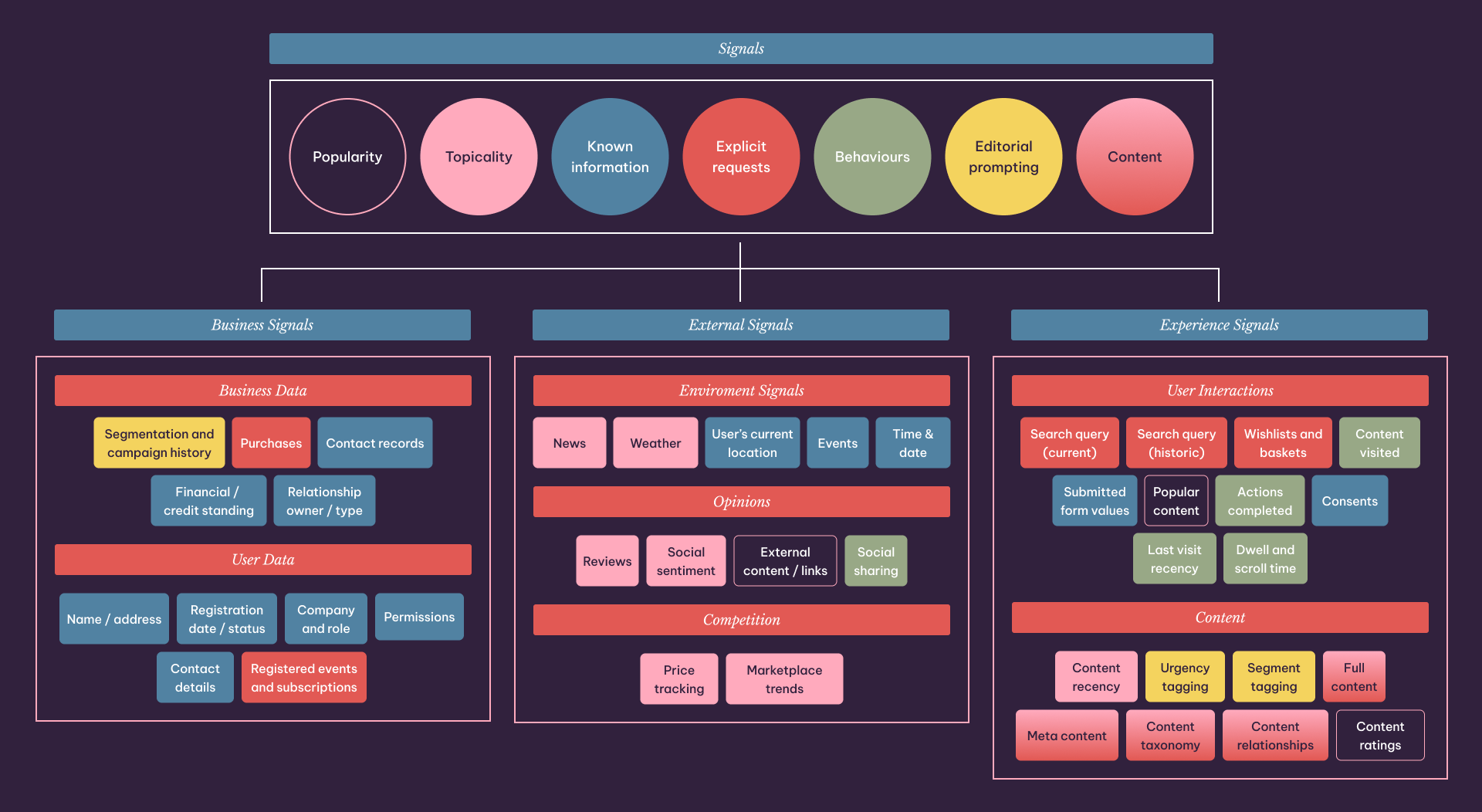

The signals you collect will be determined by your users’ and organisation’s needs, and the materials you have available. The types of data that will be relevant are likely to fall into the following categories:

- Popularity – What are your users engaging with in your environment? Remember, users are interested in what other users like, so these signals can help with social proof as well as giving an indication of what content is currently working for them. Decay factors are important to consider both here and with topicality (next) because you don’t want to overreact to temporary stimuli, or to prioritise old content over new due to historical patterns.

- Topicality – What is happening outside your environment that might influence your users’ interests? The obvious example – well, we are British – is the weather. Rainy weather will encourage interest in umbrella-related purchases, especially after gusty winds. But any trends in content, products or pricing in your sector will also have an influence.

- Known information – Specific information about an individual user; this could be personal information, previous interactions, demographic data, anything that you have stored on them.

- Explicit requests – Things that the user has asked for, ideally in their own words, although sometimes via transactions. Search requests are the obvious example, whether current or historical; these are the most direct expressions of intent that your users are giving you.

- Behaviours – Things that the user has done, such as pages that they have visited and other actions that they have completed. This is often the richest stream of information that users will be giving you, but the one that may need most interpretation (whether manually or via AI).

- Editorial prompting – We talked in our manifesto about not creating black boxes. We need the ability for our experts to be able to provide hints to our processes, through urgency tagging or campaign tagging. This may be to course correct an algorithm that needs tuning, but day to day will always be needed for the ability to create a warm start for new patterns – to prioritise new content with a large investment intended to connect with a large audience, for example.

- Available content – If you’re only analysing your users’ signals, you’re typically leaving half the equation up to manual intervention. Analysing your own content for the same signals that you’re collecting on your users will allow your systems to connect users to the content independently, rather than acting as an auto-segmentation system that your marketers must then hook up to conversion pathways manually.
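The decay factors mentioned under popularity can be illustrated with a simple half-life weighting. This is only a sketch of one common approach (exponential decay); the function name and the seven-day half-life are our own assumptions rather than anything a particular platform prescribes.

```python
def decayed_popularity(event_ages_days, half_life_days=7.0):
    """Sum engagement events, each weighted by 0.5 ** (age / half_life).

    An event from today counts as 1, an event one half-life old counts as 0.5,
    two half-lives old as 0.25, and so on.
    """
    if half_life_days <= 0:
        raise ValueError("half_life_days must be positive")
    return sum(0.5 ** (age / half_life_days) for age in event_ages_days)


if __name__ == "__main__":
    old_hit = decayed_popularity([28] * 100)  # 100 views, all four weeks ago
    new_piece = decayed_popularity([0] * 10)  # 10 views, all today
    print(f"old: {old_hit:.2f}, new: {new_piece:.2f}")
```

With these assumed settings, ten views today outweigh a hundred views from four weeks ago, which is the "don't prioritise old content due to historical patterns" behaviour described above. A longer half-life would make the score less reactive to temporary stimuli.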

To build out your signals model, you will need to start with a data review, considering data sources from your business systems, experience systems, and external systems. You will then need to evaluate what data is useful for understanding your users’ intents, and what kind of signal it gives you. You’ll take this analysis as a simple signals map into your deliveries design.

There is a cost to including each signal, from applying the signals in real-time and understanding the meaning of each signal, to acquiring and processing the data for the signals in the first place (especially when bringing in data from external sources). But we don’t have to incorporate all signals in our first pass; as we identify signals, we need to prioritise them for inclusion, in tandem with our deliveries design, and build them out as we iterate and refine our model.
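One way to picture the signals map and its prioritisation is as a small table of signals, each with an estimated value and cost. The sketch below is purely illustrative: the 1 to 5 scoring scale, the value-to-cost ranking and the greedy budget selection are our own assumptions, and real prioritisation will involve rather more conversation than arithmetic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Signal:
    name: str
    category: str  # e.g. "behaviours", "topicality", "explicit requests"
    value: int     # estimated usefulness for understanding intent, 1 (low) to 5 (high)
    cost: int      # estimated effort to acquire, process and apply, 1 (low) to 5 (high)


def prioritise(signals):
    """Order signals by value-to-cost ratio, highest first (ties: higher value, then name)."""
    return sorted(signals, key=lambda s: (-s.value / s.cost, -s.value, s.name))


def first_pass(signals, budget):
    """Greedily pick signals in priority order while they fit within the cost budget."""
    chosen, spent = [], 0
    for signal in prioritise(signals):
        if spent + signal.cost <= budget:
            chosen.append(signal)
            spent += signal.cost
    return chosen


if __name__ == "__main__":
    signals_map = [
        Signal("previous searches", "explicit requests", value=5, cost=2),
        Signal("weather feed", "topicality", value=3, cost=4),
        Signal("pages visited", "behaviours", value=4, cost=1),
        Signal("competitor tariffs", "topicality", value=4, cost=5),
    ]
    for signal in first_pass(signals_map, budget=4):
        print(signal.name)
```

Signals that miss the first pass stay on the map, ready to be picked up as the model is iterated and refined.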

3. Deliveries design

Deliveries are where we will use personalised content to connect our users with our solutions. This will form part of our Experience Strategy and Experience Design processes, which will be co-designed with the personalisation strategy.

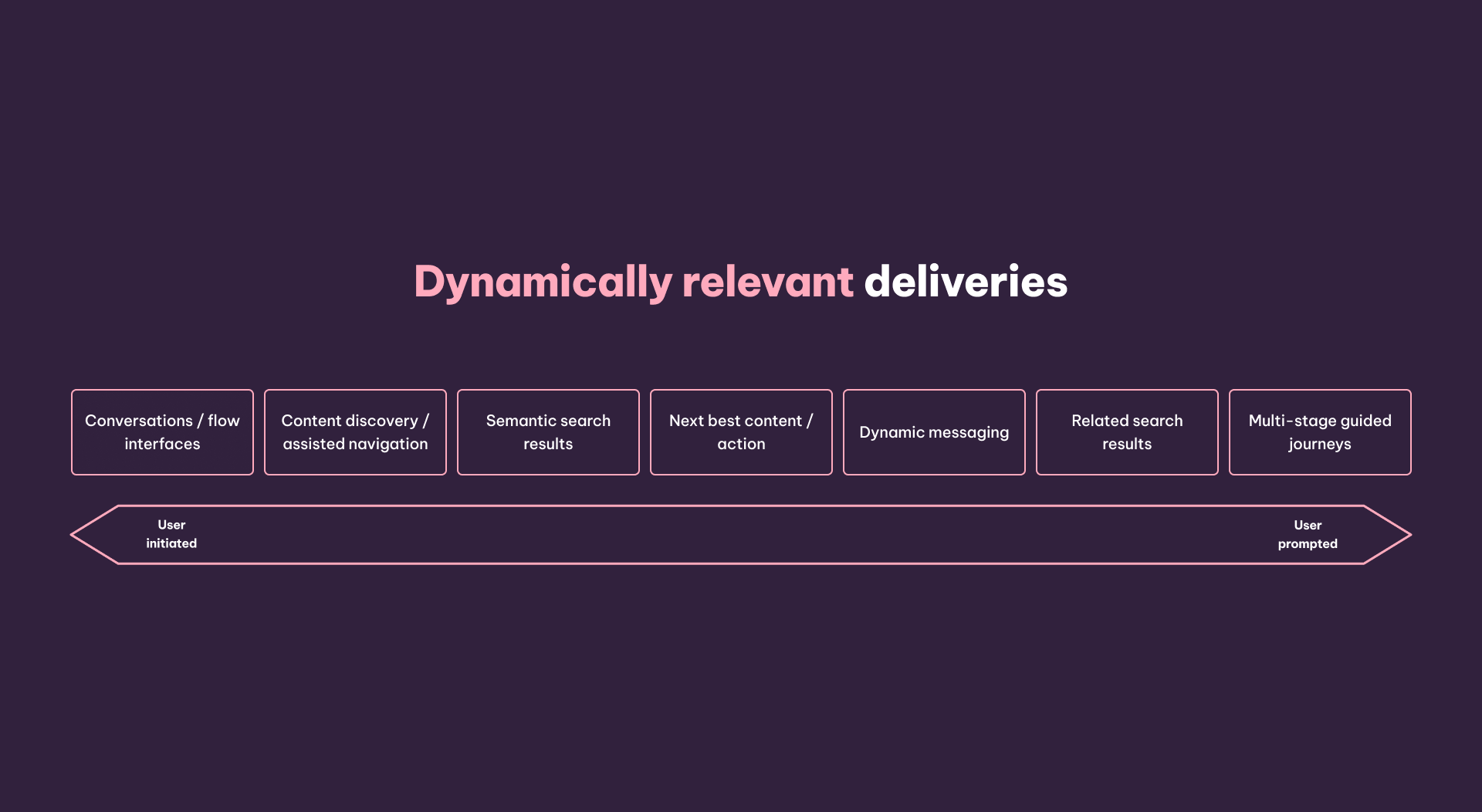

Depending on our aims and our user needs, we may need several delivery components, covering a range of user engagement from user-initiated to user-prompted, always allowing the user to stay in control of their own experience while providing guided pathways through to the conversion moments our experiences provide.

Some design components you’re likely to consider within your process are:

- Multi-stage guided journeys: Using a mix of pull and push events across multiple channels and activity stages, including email and SMS, to guide a user towards a desired outcome.

- Semantic search results: Semantic is important in this context, not just because it gives us the ability to interpret the user’s intent by extending the keyword context with language models (Optimizely’s Semantic Search is pre-trained for this purpose), but because it helps set up our next delivery.

- Related search results: We can use semantic search to take related searches much, much further than previous models (such as “Other people searched for”) by providing other personalisation signals as context for the search query, such as the user’s previous interests as well as topicality and popularity signals. Because this is built on semantic search, this is processed as intent context, rather than additional keywords or filters, so we can use it to expand rather than narrow results and enhance discovery.

- Dynamic messaging: Altering messages shown based on our personalisation signals and data, either to prioritise the content the user seems to be interested in, or to adapt our tone of voice. This is classically via variant messaging using an audience builder approach (in Optimizely using Visitor Groups), but this can be time consuming. Optimizely CMP content generation can help here, although for a more dynamic approach we’d want to use Generative AI on the fly.

- Next best content: Personalised, very specific recommendations on the very next things the user should look at; to deliver value for the user we want to take this beyond simple if-this-then-that recommendation approaches, so a deep machine understanding of our content is needed.

- Content discovery/assisted navigation: Relative to next best content, this situates personalised navigation within the context of our general experience, giving them the social context and control we called for in our manifesto.

- Conversational interfaces: In their fullest form, AI chatbots fine-tuned to our content (we're talking Generative AI on the fly again).

Most of these deliveries are familiar, from classical personalisation approaches, and marketing automation and segment-based marketing for mass market approaches, to account-based marketing for individual high-reward targets. This isn’t surprising; as we previously discussed in our destination article, the differences in hyper-personalisation are of efficiency, scale and ambition, rather than core concepts.

4. Implementation

We’re not going to describe the project and development processes here, but this pillar is where we bring our strategies to life as actual, tangible experiences. We’re going to illustrate how this may play out via two (fictional) case studies, both using the Optimizely stack we described in our first pillar.

Case study: Intelligent customer service centre

Objective

In this scenario, we want a customer service centre for a utilities company that both reduces the load on our human customer service agents, and puts the right information at our users’ fingertips. We’ve already built an account management dashboard and help centre, providing our customers with the ability to transact online and to access our help content for issues they might be having, but we want to take the customer experience to the next level. Here’s what we’re going to do:

Signals

a. Our business systems are going to feed customer transaction information into ODP (Optimizely Data Platform) via OCP (Optimizely Connect Platform) – payments, meter readings, vulnerable customer status, contract expiry, current tariff – letting us know what actions are due or have recently been completed.

b. We’re also storing previous searches in the customers’ ODP records, letting us know previous concerns and interests.

c. We’re tracking the customer with Content Recommendations, pulling out topics that have been identified as of interest to them. We’ll also store the Content Recommendations topic analysis of our content as metadata within the CMS, so we can use it in some of our dynamic deliveries.

d. We’re taking a feed of tariffs that we have available, and storing the tariff details in our CMS. We’ll also take a feed of competitors’ tariffs.

e. We’re going to dynamically pull in an external weather feed for the customer’s area, letting us know if there are forecasts of any extreme weather events in their area.

Deliveries

a. We’re going to dynamically decide if we need a Next Best Action component – e.g. if we have stopped receiving smart meter readings or the customer’s tariff is coming to an end. Let’s say their tariff is ending – we’re going to look up the details of their current tariff and identify the closest match to their previous preferences (we could use Semantic Search for this, but realistically this probably only needs to be a simple lookup). We’ll then recommend that tariff to them, unless of course, a competitor has just launched a much better tariff and the customer came to us via a comparison service so we know they’re a likely switcher, in which case we suggest our most competitive tariff on the day.

b. The messaging on the Next Best Action component is critical; we’ll use the profile data in ODP to match the customer to a segment, and pull in Dynamic messaging from a Visitor Group using custom groups.

c. We’re taking both the Next Best Action signals and topicality signals (that is, the weather) and automatically building a Semantic Search prompt that’s going to generate a Content discovery area showing the help content that’s most likely to be relevant to this user at this moment. On initial engagement, we’ll just use topicality and Next Best Action signals; however, if the user starts to engage with help content we’ll rebuild our prompt to include recent previous searches and visits.

d. As the customer reacts to their Next Best Action, we’ll narrow the Content discovery prompts to exclude the topicality prompts. Instead, we’ll use their previous help searches and visits (by topic) to build a new prompt to query our objection-handling content. We’ll determine the prominence of this based on how competitive the tariff we’re offering them is, their previous switcher status, how close their contract is to expiry, and how many times we’ve previously offered this action.

e. And we’ll feed these actions into Optimizely Campaign, allowing for follow-up communications – so if the user starts but doesn’t complete the renewal journey, we can initiate a multi-stage guided journey to prompt them to re-engage using push communications.
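The Next Best Action decision in delivery (a) could be sketched along the following lines. This is a simplified illustration under our own assumptions: the field names, the thresholds, the rule that missing meter readings take priority, and the use of "came via a comparison site" as the only likely-switcher indicator are all hypothetical, and none of this is an Optimizely API.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Tariff:
    name: str
    kind: str          # e.g. "fixed" or "variable"
    unit_rate: float   # pence per kWh; lower is more competitive


@dataclass(frozen=True)
class Customer:
    current_tariff: Tariff
    days_to_contract_end: int
    days_since_meter_reading: int
    came_via_comparison_site: bool  # our stand-in for "likely switcher"


def next_best_action(customer: Customer, our_tariffs: List[Tariff],
                     best_competitor_rate: float,
                     renewal_window_days: int = 30,
                     reading_gap_days: int = 35,
                     undercut_margin: float = 2.0) -> Optional[str]:
    """Return a short action label, or None if no component should be shown."""
    # Missing meter readings take priority (an assumed ordering).
    if customer.days_since_meter_reading > reading_gap_days:
        return "Check smart meter connection"
    # No renewal prompt until the contract is close to expiry.
    if customer.days_to_contract_end > renewal_window_days:
        return None
    current = customer.current_tariff
    # Closest match: prefer the same kind of tariff, then the nearest unit rate.
    offer = min(our_tariffs,
                key=lambda t: (t.kind != current.kind, abs(t.unit_rate - current.unit_rate)))
    # A likely switcher facing a much better competitor deal gets our cheapest tariff.
    undercut = best_competitor_rate < offer.unit_rate - undercut_margin
    if undercut and customer.came_via_comparison_site:
        offer = min(our_tariffs, key=lambda t: t.unit_rate)
    return f"Renew on {offer.name}"
```

As the case study notes, a simple lookup like this is probably enough for tariff matching; the more interesting work is in the messaging and content discovery that surround it.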

Case study: Thought leadership programme

Objective

In this scenario, we’re a global professional services provider with world-class expertise across multiple sectors and industries. Our subject matter experts provide hundreds of new articles a year which build knowledge, provide insight and demonstrate our capabilities, as well as providing opportunities for lead generation. We want to connect both new prospects and existing clients to the materials that are of most interest to them and will deepen their knowledge of our services, making them more likely to reach out to one of our expert contacts or to engage with one of our events.

Identity issues

One issue with many professional services firms’ marketing websites is that they lack reasons for users to log in, so we tend to be heavily reliant on behavioural personalisation. However, professional services conversion journeys tend to be multi-part, and to be centred around personal connections and conversations, which makes personalisation based on known information even more valuable. We want our users’ online experiences to support and extend their offline experience, not to be disconnected.

Two opportunities for bridging this gap are:

i. Via a Single Sign On mechanism shared with a client portal, so we have a login even if the purpose of the login is primarily for a different system – this is reliable and persistent (and creates other experience integration possibilities), but relatively uncommon.

ii. Via contact matching during a lead generation event, which we can then use to synchronise profiling information. While this works, it won’t work cross-device and is vulnerable to tracking identifier resets, so it tends to degrade over time. Counter this by making sure that lead generation isn’t a one-time thing; ongoing contact should be encouraged, with additional forms for new events and integration of push message tracking into your contact matching.

Signals

a. So, assuming that we’re using the contact matching approach for known information personalisation, we’ll be using a mix of MVC forms (for programmatic control during contact matching) and Optimizely Forms (for editorial field control) for lead capture. Having matched contacts, we’ll pull in information about our offline relationship with the user and store it in ODP. This should include items like name of contact, last contact date, topics of conversation, events attended, organisation and role, region, and lead score. Some of these signals overlap with behavioural signals, but we can use confirmed information to override or reinforce those signals.

b. We’re tracking the user with Content Recommendations, pulling out topics that have been identified as of interest to them: this is our strongest signal. We’ll also store the Content Recommendations topic analysis of our content as metadata within the CMS, so we can use it in some of our dynamic deliveries.

c. We’re also storing previous searches in the users’ ODP records, letting us know previous concerns and interests.

d. We’re collecting content ratings for our content using Optimizely Page Ratings, alongside number of page visits, and metrics of pages visited that led to a conversion event. This enables us to use page popularity as a signal, but through the lens of its value to both the user and to us, so we can construct it into a content effectiveness signal.

e. We’ll track visit frequency and pages visited, which combined with our offline metrics can give us an engagement score.

f. Talent acquisition is a crucial function of thought leadership for most professional services firms; we will ingest job listings from our Applicant Tracking System, and track job listing and careers centre engagement by users to identify potential recruitment targets.

g. In addition to our content taxonomy, automatically generated by Content Recommendations, we’ll take into account upcoming events data – time, topic, location, relative to the user.
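The content effectiveness signal described in (d) could be blended from ratings, visits and conversions in many ways. The sketch below shows one possibility; the weights, the logarithmic scaling of visits and the 5% target conversion rate are all assumptions of ours for illustration, not values taken from any Optimizely product.

```python
import math


def content_effectiveness(avg_rating, visits, conversions,
                          max_rating=5.0, target_conversion_rate=0.05,
                          weights=(0.3, 0.3, 0.4)):
    """Blend user value (rating), reach (visits) and business value (conversions) into 0..1."""
    if visits <= 0:
        return 0.0
    rating_part = max(0.0, min(1.0, avg_rating / max_rating))
    # Log scale so that very popular pages don't drown everything else out;
    # saturates at roughly 10,000 visits.
    reach_part = min(1.0, math.log10(1 + visits) / 4)
    # Share of the (assumed) target conversion rate that the page achieves.
    conversion_part = min(1.0, (conversions / visits) / target_conversion_rate)
    w_rating, w_reach, w_conversion = weights
    return w_rating * rating_part + w_reach * reach_part + w_conversion * conversion_part


if __name__ == "__main__":
    niche = content_effectiveness(avg_rating=4.5, visits=200, conversions=10)
    viral = content_effectiveness(avg_rating=2.5, visits=5000, conversions=5)
    print(f"niche but valued: {niche:.2f}, popular but shallow: {viral:.2f}")
```

Under these assumed weights, a well-rated niche article that converts scores above a heavily visited one that doesn't, which is the "popularity through the lens of value" idea in practice.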

Deliveries

a. On our thought leadership homepage, we’ll maintain normal content promotions and recency-based listings, but our primary estate will be given over to a personalised Assisted Navigation area. This will be structured and presented as a normal content listing, but constructed using a Semantic Search query prompted by the user’s interest signals with a recency filter and a content effectiveness bias.

If the user is brand new to us, we’ll use a Content Recommendations block using Account-based recommendations to assign a cold start profile for the user.

b. We’ll have a personalised Next Best Content component after each article. This will be delivered through Optimizely Semantic Search, combining both the users’ long-term interests and the topics of the current article so we can generate a recommendation that builds on the user’s immediate engagement.

c. We’ll have a search function dedicated to the thought leadership section, separate to our site search. This will also use Semantic Search, and the direct search will operate purely on the user’s prompt. However, we will also present an alternative results set as a Content Discovery area, using not only the user’s search query but their other interest signals (this time dropping the recency filter to expose more of our content).

d. We will have a contact call to action. If the user has a named contact, we will use their details. Otherwise, we will feed their interests and location to a Graph query into the contact list to identify a suitable named contact to introduce them to. We may introduce an element of lead scoring here, to withhold direct contact details from prospects with a low likelihood of conversion.

e. For likely career seekers, we will promote job listings in place of the contact call to action using their interests taxonomy, including roles for any job listings previously visited, biased towards their location, pulled out via a Graph query.

f. We’ll be sending users content digests of new content matching topics which interest them, via email, SMS and Web notifications using Optimizely Campaign. Other notifications will include event reminders and follow-ups. The email and SMS deliveries will double up as an opportunity to renew our contact matching on existing devices.
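To illustrate how deliveries (a) and (c) differ, here is a rough sketch of assembling a search request from interest signals, with an optional recency filter and a content effectiveness bias. The request shape (`text`, `boost`, `published_after`) is entirely hypothetical and is not the Optimizely Semantic Search or Graph API; it simply shows which signals go in and which filter is dropped.

```python
from datetime import date, timedelta


def build_discovery_query(interests, today, search_text=None,
                          recency_days=90, max_topics=5):
    """Build a hypothetical semantic search request from interest signals.

    interests maps topic -> weight; the strongest topics are added as intent
    context alongside any search text the user typed.
    """
    ranked = sorted(interests.items(), key=lambda kv: (-kv[1], kv[0]))
    top_topics = [topic for topic, _ in ranked[:max_topics]]
    parts = ([search_text] if search_text else []) + top_topics
    query = {"text": " ".join(parts), "boost": "content_effectiveness"}
    # Pass recency_days=None to drop the recency filter and expose older content.
    if recency_days is not None:
        query["published_after"] = (today - timedelta(days=recency_days)).isoformat()
    return query


if __name__ == "__main__":
    interests = {"tax": 0.9, "esg": 0.4, "ai": 0.7}
    # (a) Homepage assisted navigation: interests only, recent content.
    print(build_discovery_query(interests, date(2024, 6, 30)))
    # (c) Content discovery beside search: query plus interests, no recency filter.
    print(build_discovery_query(interests, date(2024, 6, 30),
                                search_text="carbon reporting", recency_days=None))
```

The direct search results in (c) would use the user's prompt alone; only the alternative results set is built this way.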

5. Iteration and refinement

No digital experience is ever complete. Within our Signals discovery and Deliveries design, we will have identified factors that weren’t included in our initial delivery.

More than that, though, through analysis of how users engage with personalisation areas and subsequent KPI goals, we will identify where they feel that they are being under-, over- or erroneously-personalised. As we learn more, and as users change their engagement patterns, we will refine our signals and deliveries accordingly, ideally as part of an experimentation programme.

Summary

Every organisation has different personalisation scenarios. Different relationships, different experiences, different content, different users, different platforms: different opportunities. This can seem both complex and daunting. But with the right strategy, and the right technical capabilities, both coming up with an effective hyper-personalisation strategy and delivering it are within the grasp of many teams who might be thinking that this needs more resources than they have available.

If you and your organisation are looking for support, get in touch with our Solutions team today.
